
Paper review and beyond:

Introduction to the Artificial Neural Networks co-authored by Andrej Krenker, Janez Bešter, and Andrej Kos

To review the paper and understand some of the important aspects of both the paper and the topic of Artificial Neural Networks, I decided to take a different approach to writing this blog. Instead of giving a summary of what I have understood, I will go through the journey of questions my mind was throwing at me while I read and explored this topic, along with the answers I got from the paper or from external research. So here is the paper review and beyond, in question-and-answer format.

My first question:

What is an ANN and why does it exist?

As a picture is worth a thousand words, I will include as many pictures as I think are relevant. That should save readers time and make the concepts quicker and easier to understand.

ANN: Artificial Neural Network

Warren McCulloch and Walter Pitts (1943) created a computational model for neural networks based on mathematics and algorithms. The model is derived from the structure of the biological brain; that structure and its mathematical model are shown below:
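The McCulloch-Pitts neuron is usually described as a binary threshold unit: its inputs are 0 or 1, and it fires (outputs 1) when the weighted sum of its inputs reaches a threshold. Here is a minimal Python sketch. The weights and thresholds are picked by hand for illustration rather than learned, and the logic-gate examples are my own:

```python
def mcculloch_pitts(inputs, weights, threshold):
    """Binary threshold unit: output 1 if the weighted input sum reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Hand-picked (illustrative) settings that make the unit behave like logic gates.
def and_gate(a, b):
    return mcculloch_pitts([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    return mcculloch_pitts([a, b], [1, 1], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
```

Nothing in this unit learns. Choosing the weights and threshold by hand is exactly the step that later models replace with training.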

Why does it exist?

The whole idea is to replicate the learning mechanism of the biological brain in a machine: to understand how the brain learns things and to create computer programs that learn in a similar fashion. This helps to create self-learning programs that do not need to be explicitly programmed for a task. Instead, they observe the task being done by others and are able to replicate it.

Again, a picture to make a long story short:

Interesting! Tell me more about it. How do I create a mathematical model of the brain?

To create a mathematical model of the brain, all you need is the following:

1. Dendrites -> Inputs
2. Synapses -> Weights
3. Nucleus -> Summation
4. Axon -> Activation function
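Here is a minimal Python sketch of a single artificial neuron that follows the four-part mapping above. The sigmoid activation and the example numbers are assumptions chosen for illustration:

```python
import math


def neuron(inputs, weights, bias):
    """A single artificial neuron following the dendrite/synapse/nucleus/axon mapping."""
    # Dendrites -> inputs, synapses -> weights: each input is scaled by its weight.
    weighted = [x * w for x, w in zip(inputs, weights)]
    # Nucleus -> summation: add up the weighted inputs (plus a bias term, discussed later).
    z = sum(weighted) + bias
    # Axon -> activation function: squash the sum into (0, 1) with a sigmoid.
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical numbers, only for illustration.
print(neuron([1.0, 0.5], [0.4, -0.2], bias=0.1))
```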

A network built from all of the above may look like this:

To illustrate the above with some actual data, here is an example:

The important thing to know is how the network learns things and remembers them. To learn is to classify things correctly. When we classify something incorrectly, we ask ourselves what caused the mistake. Usually the answer relates to our ability to identify the distinguishing qualities of the things we are classifying. For example, to distinguish between a cat and a dog, we look at the structure of the face and the size of the body. Having four legs and one tail will not help us tell a dog from a cat, though four legs and one tail are important for identifying both as a type of animal. The structure of the face and the size of the body are more relevant features, so we give them more importance. Mathematically speaking, giving more importance means giving more weight to those input features, which means that in the above model we need to adjust our weights.

The next question could be: how do we adjust the weights? The answer is to look at the difference between the actual and the predicted output. The larger the difference, the more we need to adjust our weights. This is done with a method called backpropagation, where derivatives are used to find the downhill direction in which the error decreases, and the weights are updated accordingly. All the weights are updated simultaneously rather than one at a time, so that the adjustment covers the entire network and all the input features. Otherwise we would end up in a loop of adjusting one weight and disturbing the importance of the other weights. The full backpropagation formula could be discussed in a separate blog.
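To illustrate the weight-update idea (this is not the paper's formulation), here is gradient descent for a single sigmoid neuron with squared error. This is the one-layer special case of backpropagation. The toy task (logical AND), the learning rate and the epoch count are arbitrary choices:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train(samples, lr=0.5, epochs=20000):
    """Gradient descent for one sigmoid neuron with error E = 0.5 * (y - target)^2."""
    n = len(samples[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for x, target in samples:
            y = predict(w, b, x)
            # dE/dz via the chain rule, using sigmoid'(z) = y * (1 - y)
            delta = (y - target) * y * (1 - y)
            for i in range(n):
                grad_w[i] += delta * x[i]
            grad_b += delta
        # Update every weight at once, stepping "downhill" against the gradient.
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Toy data (hypothetical): learn logical AND.
data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train(data)
for x, t in data:
    print(x, t, round(predict(w, b, x), 3))
```

Each epoch first accumulates the gradient for every weight and only then applies all the updates together, which is the simultaneous update described above. In a multi-layer network, backpropagation applies the same chain rule layer by layer to obtain these gradients.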

Wait a minute, I see "b" (biases). What is that and why is it required? It was nowhere in the biological diagram you showed above.

The bias adjusts the neuron's weighted sum independently of the inputs, so the neuron can still respond correctly when the inputs are zero or a mix of positive and negative values. In short, a bias value allows you to shift the activation function to the left or right, which may be critical for successful learning. Going into the details of a particular problem will help us understand this better.
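Here is a small Python sketch of the shifting effect, assuming a single input with weight w. The output crosses 0.5 where w*x + b = 0, that is at x = -b/w, so changing b slides the curve left or right. Without a bias, the output for a zero input is stuck at 0.5 whatever the weight is:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(x, w, b):
    """Single-input neuron: sigmoid(w * x + b)."""
    return sigmoid(w * x + b)


w = 1.0
for b in (-2.0, 0.0, 2.0):
    midpoint = (0.0 - b) / w  # solves w * x + b = 0, where the output equals 0.5
    print(f"b = {b:+.1f}: output is 0.5 at x = {midpoint:+.1f}, "
          f"output at x = 0 is {neuron_output(0.0, w, b):.3f}")
```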

OK, got it. What is an activation function? Can you explain more?

An activation function such as the sigmoid takes an input of any size, however large or small, and converts it into a value bounded between 0 and 1. It does this so that we can relate the number to a probability: a value near 1 means almost certainly "yes", and a value near 0 means almost certainly "no". For example, if we input a very large negative number into the function 1/(1+e^(-x)), say x = -10,000, the output will be close to 0. If we input a large positive number such as 10,000, the output will be close to 1. An input of x = 0 gives a fifty-fifty chance, that is, a value of 0.5.
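Evaluating 1/(1+e^(-x)) literally at x = -10,000 overflows in floating point, because e^(10,000) is far beyond the largest double (Python's `math.exp` raises `OverflowError`). So this minimal Python sketch of the examples above uses the common, algebraically equivalent rewrite for negative inputs:

```python
import math


def sigmoid(x):
    """Sigmoid 1 / (1 + e^(-x)), written so that large |x| does not overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))   # e^(-x) <= 1 here, so no overflow
    e = math.exp(x)                         # e^x < 1 for negative x
    return e / (1.0 + e)                    # same value: top and bottom multiplied by e^x


for x in (-10_000, 0, 10_000):
    print(x, sigmoid(x))
```

This prints 0.0, 0.5 and 1.0. The extremes are not exactly 0 and 1 mathematically, but they round to them in double precision.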

Why this sigmoid function? Can't we have a different, simpler function?

We use an activation function (generally the sigmoid function 1/(1+e^(-x))), and the idea is that its derivative is mathematically convenient to calculate.

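The sigmoid function is defined as σ(x) = 1/(1+e^(-x)), and its derivative can be expressed using the function itself: σ'(x) = σ(x)(1 - σ(x)).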
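A short worked derivation shows why the sigmoid's derivative can be written in terms of the sigmoid itself:

```latex
\sigma(x) = \frac{1}{1+e^{-x}}

\sigma'(x) = \frac{e^{-x}}{(1+e^{-x})^{2}}
           = \frac{1}{1+e^{-x}} \cdot \frac{e^{-x}}{1+e^{-x}}
           = \sigma(x)\left(1 - \frac{1}{1+e^{-x}}\right)
           = \sigma(x)\bigl(1 - \sigma(x)\bigr)
```

The third step uses e^(-x)/(1+e^(-x)) = 1 - 1/(1+e^(-x)). Once the forward pass has computed σ(x), the derivative costs only one subtraction and one multiplication. At x = 0 it equals 0.5 × 0.5 = 0.25, which is its maximum.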

OK, is this the only type of function that can do this job?

No, there are different types of activation functions that can do a similar job. Please refer to the table below:

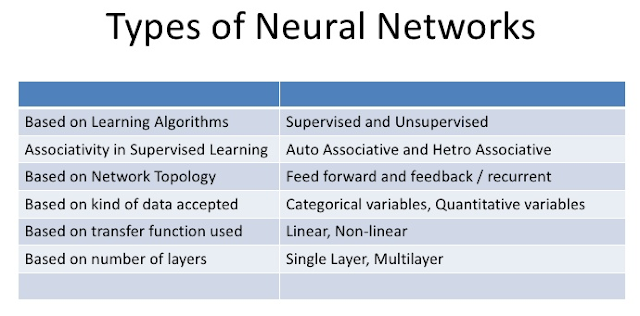
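The table above is an image, so this sketch does not claim to match its exact list. Here are a few widely used activation functions in Python, with their output ranges in comments:

```python
import math


def step(x):      # binary threshold: output is 0 or 1
    return 1.0 if x >= 0 else 0.0


def sigmoid(x):   # output in (0, 1)
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):      # output in (-1, 1); equals 2 * sigmoid(2x) - 1
    return math.tanh(x)


def relu(x):      # output in [0, infinity)
    return max(0.0, x)


for f in (step, sigmoid, tanh, relu):
    print(f.__name__, [round(f(x), 3) for x in (-2.0, 0.0, 2.0)])
```

Tanh is a rescaled sigmoid centred on 0. ReLU drops the upper bound entirely and has a trivial derivative (0 or 1), which is one reason it is popular in deep networks.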

I guess that since there are different types of activation functions, there will also be different types of neural networks. Could you tell me what the different types of neural networks are?

The above example was a simple neural network. In practice, there are very different types of neural networks, and they are broadly classified as shown in the table below:

Supervised and unsupervised learning simply refer to whether we have labelled data to learn from, or whether we are just grouping the data based on its features, respectively.

Autoassociative memories are capable of retrieving a piece of data when presented with only partial information from that piece of data. Heteroassociative memories, on the other hand, can recall an associated piece of data from one category when presented with data from another category. Hopfield networks have been shown to act as autoassociative memories, since they are capable of remembering data by observing a portion of that data. Bidirectional Associative Memories (BAM) are Artificial Neural Networks that have long been used for performing heteroassociative recall. We will look at some of these in more detail below.
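To make heteroassociative recall concrete, here is a minimal Python sketch of a Bidirectional Associative Memory under simplifying assumptions. It uses bipolar (+1/-1) vectors and Hebbian (outer-product) weights. The two pattern pairs are made up and chosen so that the x patterns and the y patterns are each mutually orthogonal, which makes recall exact:

```python
def sign(v):
    # Bipolar threshold: +1 for v >= 0, otherwise -1
    return 1 if v >= 0 else -1


def bam_weights(pairs):
    """Hebbian BAM storage: W[i][j] = sum over stored pairs of x[i] * y[j]."""
    n, m = len(pairs[0][0]), len(pairs[0][1])
    w = [[0] * m for _ in range(n)]
    for x, y in pairs:
        for i in range(n):
            for j in range(m):
                w[i][j] += x[i] * y[j]
    return w


def recall_y(w, x):
    # Forward direction: present an X pattern, get the associated Y pattern.
    return [sign(sum(x[i] * w[i][j] for i in range(len(x)))) for j in range(len(w[0]))]


def recall_x(w, y):
    # Backward direction: present a Y pattern, get the associated X pattern.
    return [sign(sum(w[i][j] * y[j] for j in range(len(y)))) for i in range(len(w))]


# Hypothetical pattern pairs: x from one "category", y from another.
x1, y1 = [1, 1, 1, 1, -1, -1, -1, -1], [1, 1, -1, -1]
x2, y2 = [1, -1, 1, -1, 1, -1, 1, -1], [1, -1, 1, -1]
w = bam_weights([(x1, y1), (x2, y2)])

noisy_x1 = [-1] + x1[1:]        # x1 with its first element flipped
print(recall_y(w, noisy_x1))    # [1, 1, -1, -1], i.e. y1 is recovered
print(recall_x(w, y2))          # x2 is recovered from y2
```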

I see the term "network topology". I have read about it in the context of Internet/LAN/WAN connection types. Is it the same for neural networks?

Some of the topology types that exist for LAN connections also exist for neural networks. There can be numerous types of neural networks. However, if we classify them by the way the signal or input data flows through the connections, we can split them into two basic connection types:

1. Feed Forward
2. Feed Back (Recurrent)
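To make the distinction concrete, here is a minimal Python sketch with a single sigmoid neuron and made-up weights. In the feed-forward version, each output depends only on the current input. In the recurrent version, the previous output is fed back in as an extra input with its own weight u, which is an illustrative assumption:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def feed_forward(xs, w=0.8, b=0.0):
    # Each output depends only on the current input.
    return [sigmoid(w * x + b) for x in xs]


def recurrent(xs, w=0.8, u=0.5, b=0.0):
    # The previous output h is looped back as an extra input with weight u.
    h, out = 0.0, []
    for x in xs:
        h = sigmoid(w * x + u * h + b)
        out.append(h)
    return out


print(feed_forward([1.0, 1.0, 1.0]))
print(recurrent([1.0, 1.0, 1.0]))
```

Feeding the same input three times gives three identical feed-forward outputs. The recurrent outputs differ, because the recurrent neuron carries information from earlier steps.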

Recurrent neural networks seem a little complex. Can you tell me what different types of RNN exist, with more details about them?

Feedback (recurrent) networks can be further classified into the following:

- Fully Recurrent Neural Network

- Hopfield Neural Network

- Elman

- Jordan

- LSTM

- Bi-directional

- SOM

- Stochastic

- Physical

Details of most of these are given below:

1. Fully Recurrent ANN:

In a fully recurrent ANN, the output of every neuron's activation function is looped back to the input of all the neurons. This is the maximum number of feedback loops possible for the given number of neurons. The training algorithms for such networks have (1) the advantage that they do not require a precisely defined training interval, since they operate while the network runs; and (2) the disadvantage that they require nonlocal communication in the network being trained and are computationally expensive. These algorithms allow networks with recurrent connections to learn complex tasks that require retaining information over time periods of either fixed or indefinite length.
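Here is a minimal Python sketch of one time step of a fully recurrent layer, assuming tanh activations and made-up weights. The N x N matrix W connects every neuron's previous output to every neuron, which is where the N * N feedback loops come from. Only the forward dynamics are shown; the state keeps carrying information after the input drops to zero:

```python
import math


def fully_recurrent_step(h, x, W, U, b):
    """One time step: neuron i sees all N previous outputs (row W[i]) plus the current input."""
    n = len(h)
    return [
        math.tanh(
            sum(W[i][j] * h[j] for j in range(n))          # feedback from every neuron
            + sum(U[i][k] * x[k] for k in range(len(x)))   # external input
            + b[i]
        )
        for i in range(n)
    ]


n = 3
W = [[0.3] * n for _ in range(n)]   # hypothetical feedback weights
U = [[0.5] for _ in range(n)]       # one external input feeding every neuron
b = [0.0] * n
feedback_loops = sum(len(row) for row in W)   # N * N

h = [0.0] * n
for x in ([1.0], [0.0], [0.0]):     # input only at the first step
    h = fully_recurrent_step(h, x, W, U, b)
    print([round(v, 3) for v in h])
```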

2. Hopfield Neural Network:

In a Hopfield neural network, the units are binary threshold units. Each unit takes only one of two values for its state, and that value is determined by whether or not the unit's input exceeds its threshold. Convergence is generally assured in a Hopfield network (with symmetric weights and asynchronous updates), but the network may converge to a local minimum. The Hopfield network is one of the simplest forms of recurrent neural network.
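Here is a minimal Python sketch of a Hopfield network acting as an autoassociative memory. It assumes bipolar (+1/-1) binary threshold units with threshold 0, Hebbian weights, and asynchronous (one unit at a time) updates. The two stored patterns are made up and chosen to be orthogonal so that recall is clean:

```python
def train_hopfield(patterns):
    """Hebbian storage: W[i][j] = (1/N) * sum over patterns of p[i] * p[j], with W[i][i] = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, state, max_sweeps=10):
    """Asynchronous recall: update one binary threshold unit at a time until no unit changes."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            new_state = 1 if h >= 0 else -1     # threshold at 0
            if new_state != s[i]:
                s[i] = new_state
                changed = True
        if not changed:
            break
    return s


# Two made-up, mutually orthogonal patterns to store.
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = train_hopfield([p1, p2])

noisy = [-1, 1, 1, 1, -1, -1, -1, -1]   # p1 with its first unit flipped
print(recall(w, noisy) == p1)           # True: the network settles back to p1
```

If many patterns are stored, or the patterns are strongly correlated, the network can instead settle into a spurious state. That is the local-minimum caveat mentioned above.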

3. Elman and 4. Jordan Neural Networks:

In an Elman network, the output of the hidden layer is fed back to the input of the hidden layer. In a Jordan network, the output of the output layer is fed back to the input of the hidden layer instead. One way to understand the difference: a Jordan network takes the data from the output layer, which is the network's prediction, and feeds it into the hidden layer at the next time step. This gives the network more context about the given problem. An Elman network, by contrast, remembers and processes details that are more specific to the input features.
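Here is a minimal Python sketch contrasting the two feedback paths, using scalar sigmoid units and made-up weights. The only difference between the two functions is which value is stored as context for the next step:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def elman(xs, w_in=1.0, w_ctx=0.5, w_out=2.0):
    """Scalar Elman net: the previous HIDDEN value is fed back into the hidden layer."""
    h, ys = 0.0, []
    for x in xs:
        h = sigmoid(w_in * x + w_ctx * h)      # context = last hidden state
        ys.append(sigmoid(w_out * h))
    return ys


def jordan(xs, w_in=1.0, w_ctx=0.5, w_out=2.0):
    """Scalar Jordan net: the previous OUTPUT value is fed back into the hidden layer."""
    y, ys = 0.0, []
    for x in xs:
        h = sigmoid(w_in * x + w_ctx * y)      # context = last prediction
        y = sigmoid(w_out * h)
        ys.append(y)
    return ys


print(elman([1.0, 1.0, 1.0]))
print(jordan([1.0, 1.0, 1.0]))
```

Both networks start with zero context, so their first outputs agree. From the second step on they diverge, because one remembers its hidden state and the other remembers its prediction.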

5. Long Short-Term Memory

Long Short-Term Memory is derived from the idea of how we remember and forget things based on the new inputs and related outputs we receive. For example, suppose we know that curd is white and is normally served cold. If we are then given hot milk, which shares features with curd, the experience of the hot milk helps us update our memory. We forget the assumption that a white, liquid-looking substance is always cold, and we start looking at new features such as temperature and taste and remember them. That helps us distinguish between milk and curd.

In the diagram above, apart from the normal input neuron, you will find an input gate, a forget gate and an output gate. The input gate decides whether or not to write data into the memory cell. The forget gate resets (erases) the stored memory, depending on the new input and output combination. The output gate controls reading data out of the cell. The diagram shows just a single LSTM unit; many such units joined together in a network are used to solve problems.
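Here is a minimal Python sketch of one step of a single LSTM unit with scalar values, using the standard gate equations (sigmoid gates, a tanh candidate and a tanh output). The parameter names are my own, and the weights are set by hand purely to show the gates at work rather than learned:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single scalar LSTM unit; p maps hypothetical names w_*, u_*, b_* to numbers."""
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])    # input gate: how much to write
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])    # forget gate: how much old memory to keep
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])    # output gate: how much to read out
    g = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])  # candidate value to write
    c = f * c_prev + i * g      # new cell memory: keep part of the old, add part of the new
    h = o * math.tanh(c)        # value read out of the cell
    return h, c


def make_params(b_i=0.0, b_f=0.0, b_o=0.0, b_g=0.0):
    # All weights zero so that only the biases set the gates (illustration only).
    p = {name: 0.0 for name in ("w_i", "u_i", "w_f", "u_f", "w_o", "u_o", "w_g", "u_g")}
    p.update(b_i=b_i, b_f=b_f, b_o=b_o, b_g=b_g)
    return p


keep = make_params(b_i=-10.0, b_f=10.0)     # forget gate ~1: old memory is kept
erase = make_params(b_i=-10.0, b_f=-10.0)   # forget gate ~0: old memory is erased
print(lstm_step(0.0, 0.0, 1.0, keep)[1])    # cell value stays close to 1
print(lstm_step(0.0, 0.0, 1.0, erase)[1])   # cell value drops close to 0
```

The gates act on the stored cell value c, not on the network's weights. The weights change only during training.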

Bi-Directional Recurrent Neural Network:

A BRNN can be used effectively in language translation. To understand the true meaning of a sentence, it is important to consider not only the current word we are reading but also the entire sentence or paragraph before and after that word. Using a BRNN, both the past and the future context of the data can be used to derive its meaning. Similarly, it is applied to speech recognition, video recognition and interpreting the actions shown in a video. In short, it is useful for all problems where a sequence of information flows and its elements are interrelated. This type of network draws on both the future (after) values and the past (before) values when interpreting each position.
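Here is a minimal Python sketch of the bidirectional idea, using scalar tanh RNNs and made-up weights. One RNN reads the sequence left to right, and a second RNN with its own weights reads it right to left. Each position then gets both hidden values. Real BRNNs use vector states and learned weights:

```python
import math


def run_rnn(xs, w, u):
    """Simple scalar RNN: h_t = tanh(w * x_t + u * h_{t-1})."""
    h, hs = 0.0, []
    for x in xs:
        h = math.tanh(w * x + u * h)
        hs.append(h)
    return hs


def bidirectional(xs):
    fwd = run_rnn(xs, w=0.8, u=0.5)              # reads left to right (past context)
    bwd = run_rnn(xs[::-1], w=0.6, u=0.3)[::-1]  # reads right to left (future context), re-aligned
    return list(zip(fwd, bwd))                   # each position sees both directions


print(bidirectional([1.0, 0.0, 0.0]))
print(bidirectional([1.0, 0.0, 5.0]))
```

Changing only the last input leaves the forward value at position 0 untouched but changes the backward value there. In other words, the first position "sees" the future of the sequence.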

Self-Organizing Map:

A SOM is like a grid of neurons in which nearby neurons are also affected when a target neuron is adjusted. This has applications in emotion detection, as shown in the figure above, where raising and lowering an eyebrow has a major impact on the nearby part of the grid compared with the distant part. The same logic is applied when updating the weights of the network. When the weight closest to an input (the target feature) is changed, the weights of nearby neurons also change, by an amount that decreases the further they are from that target neuron (the closest weight for that feature).
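Here is a minimal Python sketch of one SOM training step on a 1-D grid with scalar weights. It assumes a Gaussian neighbourhood, which is a common choice, though other neighbourhood functions exist. Each neuron moves toward the input by a fraction of its remaining gap. That fraction is largest at the best-matching unit and shrinks with grid distance from it:

```python
import math


def som_update(weights, x, lr=0.5, sigma=1.0):
    """One update of a 1-D SOM with scalar weights and a Gaussian neighbourhood."""
    # Best-matching unit: the neuron whose weight is closest to the input.
    bmu = min(range(len(weights)), key=lambda k: abs(weights[k] - x))
    new = []
    for k, w in enumerate(weights):
        # Influence is 1 at the BMU and decays with grid distance |k - bmu|.
        influence = math.exp(-((k - bmu) ** 2) / (2 * sigma ** 2))
        # Move a fraction lr * influence of the remaining gap toward the input.
        new.append(w + lr * influence * (x - w))
    return bmu, new


bmu, updated = som_update([0.5, 0.0, 0.0, 0.0, 0.0], x=1.0)
print(bmu, [round(v, 3) for v in updated])
```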

Physical Neural Network:

The picture shown above is actual hardware created to replicate a single neuron, and combining many of them forms a physical neural network. Research is currently under way to use nanotechnology to create physical neural networks that can be deployed on mobile robots, instead of today's chips, which are designed differently. Such structures could be smaller and more efficient at the computation and memory management of a neural network than the processors and memory designed for personal computers. If this succeeds, we may see a brain-sized volume of about 1200 cc holding more neurons and memory. Perhaps someone is already dreaming of making robots like the one shown in the movie Ex Machina.

My brain: Thank you very much! Now I want to apply some of these to real-life problems :)